Hawaslsh


Hello,

I am working with a simple RF diode detector circuit followed by a high gain op-amp with input offset voltage compensation.

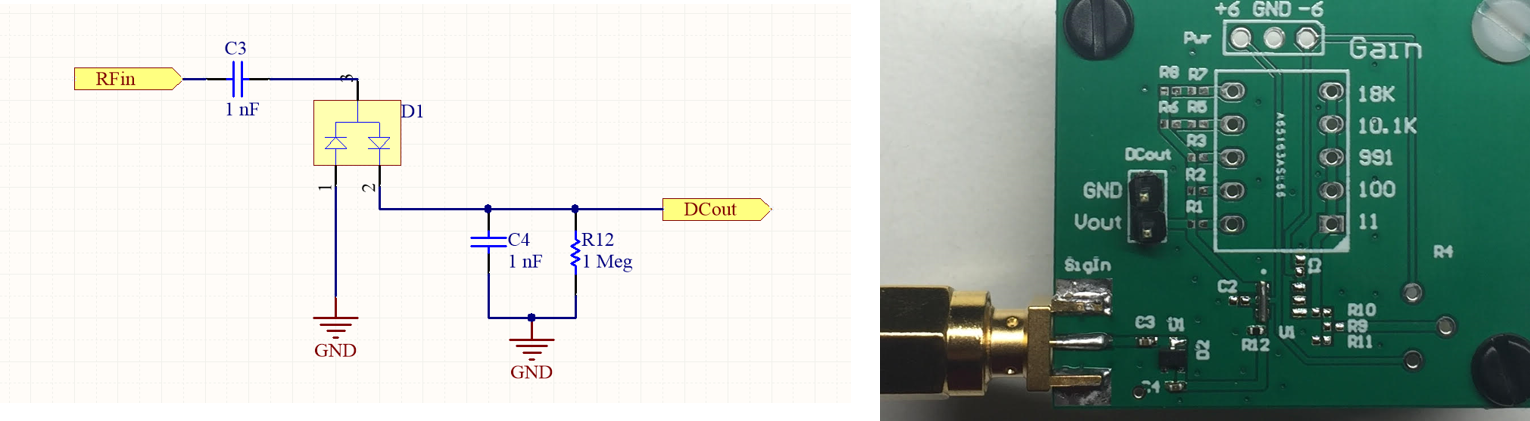

Above is a picture of the RF detection circuit alone. The input is capacitively coupled, and a pair of diodes rectifies it. A filter cap creates the DC voltage, and the 1 Meg resistor is there as a load. This works as expected; it's pretty simple. Terminating the input with a 50 ohm load causes the output voltage to drop to about 50 uV. If I apply an RF signal, the DCout voltage is proportional to the input power; for example, at 11 GHz, -20 dBm input power --> DCout = 40 mV.
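For a sense of scale, the standard dBm conversions can be sketched as below. This is only an illustration: the function names are made up for the example, and the 50 ohm figure assumes the source sees a matched resistive load, which a diode detector only approximates.

```python
import math

def dbm_to_watts(p_dbm: float) -> float:
    """Convert a power level in dBm to watts (0 dBm = 1 mW)."""
    return 1e-3 * 10 ** (p_dbm / 10)

def dbm_to_vrms(p_dbm: float, r_ohms: float = 50.0) -> float:
    """RMS voltage across a resistive load for a given power.

    r_ohms = 50 is an assumption (ideal matched load), not a measured value.
    """
    return math.sqrt(dbm_to_watts(p_dbm) * r_ohms)

if __name__ == "__main__":
    for level in (-20, -30, -40):
        print(f"{level} dBm = {dbm_to_watts(level) * 1e6:.3f} uW, "
              f"{dbm_to_vrms(level) * 1e3:.2f} mV rms into 50 ohm")
```

At -20 dBm this works out to 10 uW, or roughly 22 mV rms across 50 ohm.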

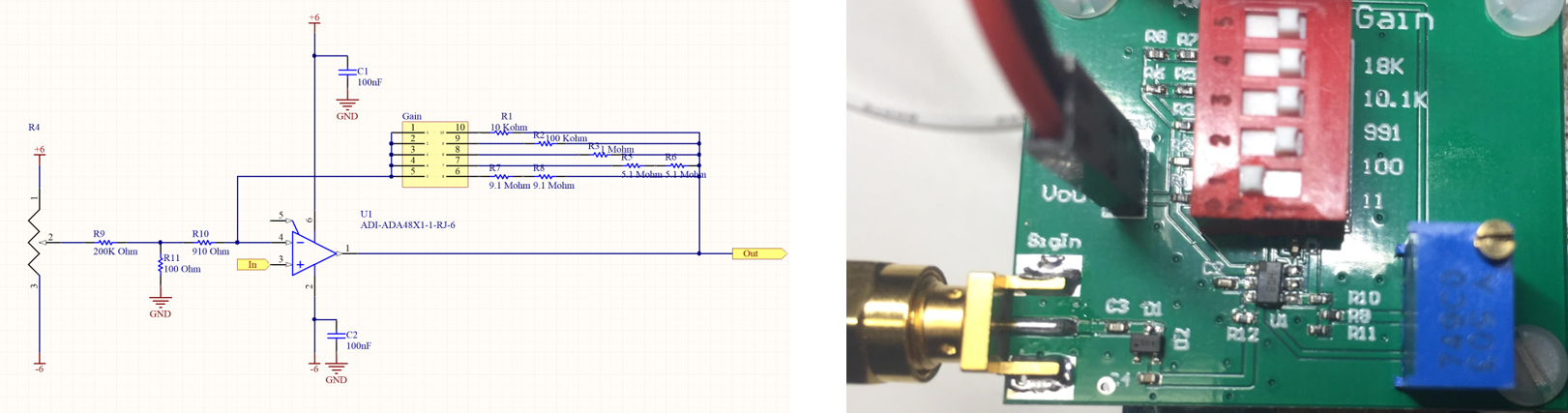

In reality I will be trying to detect much smaller powers than -20 dBm, and will need some pretty serious amplification to make use of the 10-bit resolution of my 5 V ADC. To do so, I added a non-inverting op-amp stage after the detection circuit. The topology came from an Analog Devices application tutorial and features an external offset voltage adjustment using a potentiometer. For my set of tests the feedback resistor was set to 10K, ideally giving me a gain of 11. I adjusted the pot to be as close to center (~0 V) as possible.
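A rough sketch of why so much gain is needed: it computes the ADC step size and the input power that would produce one step at the ADC. It assumes the detector output stays strictly proportional to input power below -20 dBm (extrapolating from the single 40 mV point) and that the ADC spans 0 to 5 V in 2^10 steps. All names are illustrative.

```python
import math

ADC_BITS = 10      # from the post
ADC_VREF = 5.0     # volts, from the post
GAIN = 11.0        # ideal gain with the 10K feedback resistor
REF_DBM = -20.0    # measured reference point: -20 dBm ...
REF_VDC = 40e-3    # ... gives 40 mV at the detector output

def adc_lsb(vref: float = ADC_VREF, bits: int = ADC_BITS) -> float:
    """Voltage of one ADC step, assuming 2**bits steps across vref."""
    return vref / 2 ** bits

def detector_volts(p_dbm: float) -> float:
    """Detector DC output, ASSUMING it scales linearly with power."""
    return REF_VDC * 10 ** ((p_dbm - REF_DBM) / 10)

def min_detectable_dbm(gain: float = GAIN) -> float:
    """Input power giving exactly one LSB at the ADC after `gain`."""
    v_det = adc_lsb() / gain
    return REF_DBM + 10 * math.log10(v_det / REF_VDC)

if __name__ == "__main__":
    print(f"LSB = {adc_lsb() * 1e3:.2f} mV")
    print(f"1 LSB at gain {GAIN:g} is about {min_detectable_dbm():.1f} dBm")
```

Under those assumptions one step is about 4.88 mV, so a gain of 11 only reaches down to roughly -39.5 dBm before the signal falls below a single count.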

Given the results from the detection circuit alone, I expected to see an output voltage of ~550 uV with a 50 ohm termination on the input, and ~440 mV with a -20 dBm, 11 GHz input. In reality, however, the op-amp output sits at the positive rail regardless of the RF input. If I probe the inputs to the amp directly, both sit at 549 mV, regardless of any input or any adjustment of the pot. (As a note: the pot is working; I can measure the voltage change at the pot's pin 2.)
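The expected numbers follow from the ideal non-inverting relation Vout = gain x Vin. The sketch below reproduces them and also shows what an ideal gain of 11 would ask for with 549 mV at the input. The +/-5 V rails are a placeholder assumption, since the supply voltages are not given here, and the function names are hypothetical.

```python
GAIN = 11.0  # ideal non-inverting gain stated in the post

def ideal_output(v_in: float, gain: float = GAIN) -> float:
    """Output of an ideal non-inverting stage with no offset or rails."""
    return gain * v_in

def clipped_output(v_in: float, gain: float = GAIN,
                   v_rail_pos: float = 5.0, v_rail_neg: float = -5.0) -> float:
    """Same, limited to the supply rails (rail values are ASSUMED)."""
    return min(max(ideal_output(v_in, gain), v_rail_neg), v_rail_pos)

if __name__ == "__main__":
    for label, v_in in (("50 ohm termination", 50e-6),
                        ("-20 dBm input", 40e-3),
                        ("observed 549 mV at the input", 0.549)):
        print(f"{label}: ideal {ideal_output(v_in):.6f} V, "
              f"clipped {clipped_output(v_in):.6f} V")
```

With 549 mV on the non-inverting input, the ideal output would be about 6.04 V, so on any supply at or below that the output would sit at the positive rail. That matches the symptom, though it does not explain where the 549 mV comes from.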

Clearly I am missing something pretty fundamental here. I don't know why the amp is behaving as it is. I am going to keep experimenting, but I wanted to see if anyone had suggestions for things to try, or ideas as to why this is happening.

Thanks in advance,

Sami
