cannibol_90

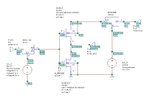

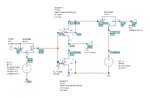

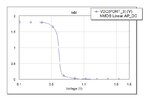

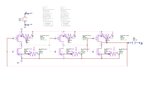

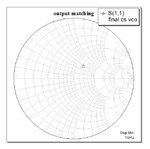

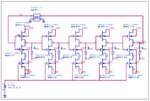

Now we are getting a linear output for the CS-VCO from 1 GHz to 5 GHz. This is with an M_Probe (Measurement Probe) attached to view the output voltage versus time. However, attaching a 50 Ω port collapses the output. Is this because of the impedance mismatch? From what we have seen, the 50 Ω port draws current (in the mA range). To eliminate this current, we had to raise the port impedance to somewhere between 100 MΩ and 1 GΩ. We need a 50 Ω port to check the oscillator noise, tuning range, output power spectrum, etc. Since 1 GΩ is impractical in a real-life scenario, what are we doing wrong? Are the parameters to blame? Or is a matching circuit needed here?
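A rough way to see why the probe and the 50 Ω port behave so differently is to treat the VCO output as a voltage source with a resistive output impedance driving a resistive load (a voltage divider). The sketch below is only illustrative: the 1 V open-circuit amplitude and 1 kΩ output resistance are hypothetical numbers, not values taken from this circuit, and a real VCO core has a complex, frequency-dependent output impedance. The `l_match` helper computes a standard lowpass L-section (series L on the 50 Ω side, shunt C on the high-impedance side) at a single frequency; all function names here are made up for the example.

```python
import math


def loaded_amplitude(v_open: float, r_out: float, r_load: float) -> float:
    """Peak voltage across r_load for a source of open-circuit peak v_open
    and purely resistive output resistance r_out (simple voltage divider)."""
    return v_open * r_load / (r_out + r_load)


def load_current_ma(v_peak: float, r_load: float) -> float:
    """Peak current (in mA) flowing into a resistive load r_load."""
    return 1e3 * v_peak / r_load


def l_match(r_high: float, r_low: float, f_hz: float):
    """Lowpass L-match from r_high down to r_low at one frequency f_hz.

    Series inductor on the r_low side, shunt capacitor across r_high.
    Returns (Q, L in henries, C in farads). Narrowband: exact only at f_hz.
    """
    if r_high <= r_low:
        raise ValueError("r_high must be larger than r_low")
    q = math.sqrt(r_high / r_low - 1.0)
    w = 2.0 * math.pi * f_hz
    x_series = q * r_low   # required series reactance (inductive)
    x_shunt = r_high / q   # required shunt reactance (capacitive)
    return q, x_series / w, 1.0 / (w * x_shunt)


if __name__ == "__main__":
    v_open, r_out = 1.0, 1000.0  # hypothetical VCO output: 1 V peak, 1 kOhm

    for r_load in (1e9, 50.0):   # probe-like load vs. 50 Ohm port
        v = loaded_amplitude(v_open, r_out, r_load)
        print(f"R_load={r_load:g} Ohm: V={v:.4f} V, I={load_current_ma(v, r_load):.4f} mA")

    q, L, C = l_match(r_out, 50.0, 3e9)  # match at 3 GHz only (hypothetical)
    print(f"L-match @3 GHz: Q={q:.2f}, L={L * 1e9:.2f} nH, C={C * 1e12:.3f} pF")
```

With these assumed numbers the 1 GΩ load leaves the swing essentially untouched, while the 50 Ω load divides it down to a few percent, which is consistent with the "collapse" described above. Because a single L-section is only exact at one frequency, a 1 GHz to 5 GHz tuning range is often handled with a buffer stage (for example a source follower or a small amplifier) between the core and the 50 Ω port rather than a passive match alone.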