mtwieg

Advanced Member level 6

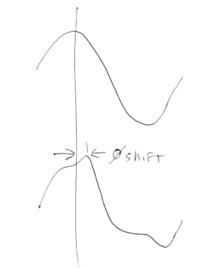

Hello, I've built a 64 MHz, 4 W class A PA for use in an MRI system, and I've recently discovered that its phase shift changes with input and output power, by about 30 degrees over its full range. This is frustrating, because I need to vary the amplitude and phase of the output with a good degree of accuracy. To be specific, I'm making short RF bursts which generally differ by 6 dB in power and must be 90 degrees out of phase. I have a feeling that this is a problem linear amplifiers will always have to some degree, so I'm considering making a current-fed class D amp, which would be amplitude modulated through its drain voltage. Would a switching amp have less phase variation than a linear amp?

Also, I'd like to have a way to measure the phase between two RF pulses, but I'm not sure how. I have a nice oscilloscope, but using its cursors seems like a sloppy method. I also have a good signal analyzer, but I don't know how I could possibly use it to measure relative phase. Any advice?
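To make the measurement I'm after concrete, here is a minimal sketch of one way it might be done offline on a captured scope record: project each burst onto a continuous 64 MHz reference and compare the two complex amplitudes. Everything here is an assumption for illustration, not something I have working: the 1 GS/s sample rate, the burst positions and lengths, the synthetic test record, and the helper name `burst_phasor` are all made up. It also assumes each analysis window holds a whole number of carrier cycles, so the double-frequency term averages out.

```python
import cmath
import math

FS = 1e9      # assumed scope sample rate, samples/s
F0 = 64e6     # carrier frequency from the post, Hz
BURST = 1000  # assumed window length: 1 us = exactly 64 carrier cycles

def burst_phasor(record, fs, f0, start, length):
    """Complex amplitude of record[start:start+length] at f0.

    The reference exp(-j*2*pi*f0*n/fs) uses the absolute sample index n,
    so windows at different times share one continuous phase reference.
    """
    acc = 0j
    for n in range(start, start + length):
        acc += record[n] * cmath.exp(-2j * math.pi * f0 * n / fs)
    return 2 * acc / length

# Synthetic record (illustration only): burst 1 at 0 degrees, a gap,
# then burst 2 with 6 dB more power and a 90 degree phase offset.
a2 = 10 ** (6 / 20)  # 6 dB as a voltage ratio, about 2
record = []
for n in range(3000):
    t = n / FS
    if n < 1000:
        record.append(math.cos(2 * math.pi * F0 * t))
    elif n >= 2000:
        record.append(a2 * math.cos(2 * math.pi * F0 * t + math.pi / 2))
    else:
        record.append(0.0)

p1 = burst_phasor(record, FS, F0, 0, BURST)
p2 = burst_phasor(record, FS, F0, 2000, BURST)

# Relative phase and power ratio of burst 2 with respect to burst 1.
phase_deg = math.degrees(cmath.phase(p2 * p1.conjugate()))
ratio_db = 20 * math.log10(abs(p2) / abs(p1))
print(f"relative phase: {phase_deg:.2f} deg, power ratio: {ratio_db:.2f} dB")
```

On real data the same projection would be run on the PA input and output records; the difference between the two relative phases would then be the power-dependent phase shift of the amp itself. The scope time base would need to be stable relative to the RF source for this to mean anything.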
