overmars

Member level 3

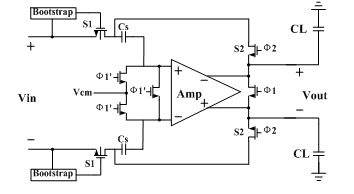

I designed a 14-bit, 50 MS/s pipelined ADC. It uses a fully differential flip-around THA, with the virtual-ground voltage set to Vcm as shown in the figure. When I vary Vcm, the overall accuracy of the ADC changes accordingly. According to theory, however, Vcm should not affect the conversion accuracy. So where is the problem?
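
For reference, the textbook argument can be written out as a minimal sketch. It assumes ideal switches (no charge injection), no parasitic capacitance on the op-amp input nodes, and an ideal op-amp whose differential input is zero in hold. The symbols are my own notation: C± are the two sampling capacitors, Q± the charge on each isolated top-plate node, and Vx± the op-amp input voltages in hold.

```latex
\begin{aligned}
\text{sample:}\quad & Q_{\pm} = C_{\pm}\,(V_{cm} - V_{in\pm}) \\
\text{hold:}\quad   & Q_{\pm} = C_{\pm}\,(V_{x\pm} - V_{out\pm}) \\
\Rightarrow\quad    & V_{out\pm} = V_{in\pm} - V_{cm} + V_{x\pm} \\
\Rightarrow\quad    & V_{out+} - V_{out-} = (V_{in+} - V_{in-}) + (V_{x+} - V_{x-})
\end{aligned}
```

With V_{x+} = V_{x-}, the differential output equals the differential input, so under these assumptions neither Vcm nor the capacitor values appear in the result.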

The pipeline consists of a 3.5-bit first stage, eight 1.5-bit stages, and a 3-bit final stage.
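
As a sanity check on the architecture, here is an ideal behavioral sketch (not the actual circuit) showing how the stage digits combine into 14 bits: 3 effective bits from the 3.5-bit stage, 1 from each 1.5-bit stage, and 3 from the flash. It assumes the input is normalized to Vref (|x| ≤ 1), ideal components, and sub-ADC decisions modeled as "nearest level, clipped". The function names are hypothetical.

```python
import math

N_BITS = 14  # 3 (3.5-bit stage) + 8 x 1 (1.5-bit stages) + 3 (flash) effective bits


def mdac_stage(x: float, eff_bits: int) -> tuple[int, float]:
    """Ideal n.5-bit stage; x is normalized to Vref, |x| <= 1.

    eff_bits=3: 3.5-bit stage (gain 8, digits -7..7, 14 comparators).
    eff_bits=1: 1.5-bit stage (gain 2, digits -1..1, 2 comparators).
    Returns (digit, residue) with residue = gain * x - digit.
    """
    gain = 2 ** eff_bits
    dmax = gain - 1
    # Sub-ADC decision: nearest level, clipped to the available digits.
    digit = max(-dmax, min(dmax, round(gain * x)))
    return digit, gain * x - digit


def flash_3bit(x: float) -> int:
    """Ideal 3-bit flash over [-1, 1]; returns code 0..7."""
    return max(0, min(7, math.floor((x + 1.0) * 4)))


def pipeline_convert(x: float) -> int:
    """Overlap-add digital correction of all stage digits into a 14-bit code."""
    d1, r = mdac_stage(x, 3)
    code = (1 << 13) + d1 * (1 << 10)  # mid-scale plus first-stage weight
    for k in range(1, 9):  # eight 1.5-bit stages, weights 2^9 down to 2^2
        d, r = mdac_stage(r, 1)
        code += d * (1 << (10 - k))
    return code + flash_3bit(r) - 4  # final stage, recentred around zero


def ideal_quantizer(x: float) -> int:
    """Reference 14-bit quantizer over [-1, 1] for comparison."""
    return max(0, min(2**N_BITS - 1, math.floor((x + 1.0) * 2 ** (N_BITS - 1))))


print(pipeline_convert(-1.0), pipeline_convert(0.0), pipeline_convert(1.0))  # 0 8192 16383
```

In this ideal model, the pipeline output matches `ideal_quantizer` exactly. Once gain, offset, or reference errors are added to the stage models, the two can be compared in the same way.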

In post-layout simulation, the offset is about 10 LSB and the gain error about 5 LSB, both out of spec. I then varied the reference, setting the positive and negative references externally, but the offset and gain error did not decrease. Why is that?
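
To make the offset and gain-error numbers comparable across different reference settings, one common endpoint (terminal-based) convention can be sketched as follows. It assumes a differential full scale of 2·Vref and a floor-type quantizer with no half-LSB shift. The transition voltages are the inputs at which the first and last code transitions occur in simulation. The function names are hypothetical.

```python
N_BITS = 14


def lsb_size(vref: float) -> float:
    """One LSB, assuming a differential full scale of 2 * vref (-vref .. +vref)."""
    return 2.0 * vref / 2**N_BITS


def offset_and_gain_error_lsb(t_first: float, t_last: float, vref: float) -> tuple[float, float]:
    """Endpoint offset and gain error, in LSB.

    t_first: input voltage at the 0 -> 1 code transition (from simulation).
    t_last:  input voltage at the (2^N - 2) -> (2^N - 1) transition.
    Ideal transitions assume a floor-type quantizer with no half-LSB shift.
    """
    lsb = lsb_size(vref)
    ideal_first = -vref + lsb
    ideal_last = vref - lsb
    offset = (t_first - ideal_first) / lsb
    gain_err = ((t_last - t_first) - (ideal_last - ideal_first)) / lsb
    return offset, gain_err


# Hypothetical example: first transition 10 LSB late, span 5 LSB too wide.
lsb = lsb_size(1.0)
print(offset_and_gain_error_lsb(-1.0 + 11 * lsb, 1.0 + 14 * lsb, 1.0))  # (10.0, 5.0)
```

With an assumed Vref of 1 V (2 V differential full scale), one LSB is about 122 µV. On that assumption, 10 LSB of offset is roughly 1.22 mV and 5 LSB of gain error roughly 0.61 mV. Re-running the calculation with each externally applied reference shows whether an error scales with the reference (a constant count in LSB) or stays fixed in volts.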

---------- Post added at 10:37 ---------- Previous post was at 10:34 ----------

figure

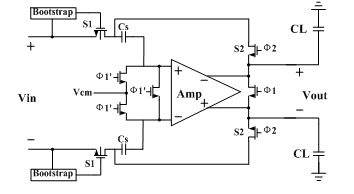